What is multiple comparison?

Actually, I think the comic from xkcd explains the multiple comparison problem briefly and precisely.

In one sentence

The more times we run the same experiment, the more likely we are to get an extreme result purely by chance, and so the more likely we are to reject H0 even when it is true.

However, some companies need to run many such tests at the same time, for example to make sure that users prefer the blue button rather than any of 300+ other colors.
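To see how fast the problem grows, here is a small Python sketch. It assumes the m tests are independent and that every H0 is true, in which case the chance of at least one false rejection is 1 - (1 - alpha)^m. The simulation uses the fact that p-values are uniformly distributed on [0, 1] under H0. The function names are only for illustration.

```python
import random


def familywise_error_rate(m, alpha=0.05):
    """Probability of at least one false rejection among m independent tests when every H0 is true."""
    return 1 - (1 - alpha) ** m


def simulate_fwer(m, alpha=0.05, trials=10_000, seed=0):
    """Monte Carlo estimate of the same probability (p-values are uniform on [0, 1] under H0)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        # One round = m tests with no real effect; it counts if any p-value falls below alpha.
        if any(rng.random() < alpha for _ in range(m)):
            hits += 1
    return hits / trials


for m in (1, 20, 300):
    print(f"m={m:3d}  formula={familywise_error_rate(m):.3f}  simulation={simulate_fwer(m):.3f}")
```

With alpha = 0.05, the chance of at least one "significant" result is already about 64% for 20 tests and practically 100% for 300 tests.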

How to deal with multiple testing

Bonferroni Method

If H0 becomes more likely to be rejected, then we just need to lower the threshold for rejecting H0, and that's it.

The threshold for rejecting H0 is called alpha, and alpha is usually set to 0.05. When there are m tests, we lower alpha to alpha/m.

This method is called the Bonferroni method, and it is the simplest one.
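A minimal Python sketch of the Bonferroni rule, assuming we already have one p-value per test (the function name `bonferroni` and the p-values are made up for illustration):

```python
from typing import List, Sequence


def bonferroni(p_values: Sequence[float], alpha: float = 0.05) -> List[bool]:
    """Reject H0 for each test whose p-value is at most alpha / m."""
    m = len(p_values)
    threshold = alpha / m  # the lowered, per-test threshold
    return [p <= threshold for p in p_values]


p_values = [0.001, 0.01, 0.02, 0.04]  # made-up p-values from m = 4 tests
print(bonferroni(p_values))           # the threshold here is 0.05 / 4 = 0.0125
```

Here only the first two tests are rejected, because 0.02 and 0.04 are above 0.0125 even though both are below the usual 0.05.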

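However, because the new alpha becomes very small when m is large (0.05/300 ≈ 0.00017 for 300 colors), the method is conservative and can miss real effects, so there are other ways to deal with the problem.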

Tukey method

This method has many names, such as Tukey's range test and Tukey's HSD (honestly significant difference) test.

Most of the time, we do A/B testing to compare whether A or B is better, and in the usual case we just use a t-test.

In statistical testing, another way to decide whether to reject H0 is to use a critical value. For example, in a t-test we calculate a statistic called the t-value. To reject H0, the t-value has to be larger (or smaller) than the critical value, depending on what kind of test you are doing; in a two-sided test, |t| has to exceed the critical value.
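Here is a small Python sketch of the critical-value approach for a two-sample t-test, using only the standard library. It assumes equal variances in both groups (Student's pooled t-test), the sample numbers are made up, and 2.228 is the two-sided 5% critical value for 10 degrees of freedom taken from a t table rather than computed here.

```python
from math import sqrt
from statistics import mean, variance


def pooled_t_statistic(a, b):
    """Student's two-sample t statistic (equal-variance assumption) and its degrees of freedom."""
    n_a, n_b = len(a), len(b)
    # Pooled variance: weighted average of the two sample variances.
    sp2 = ((n_a - 1) * variance(a) + (n_b - 1) * variance(b)) / (n_a + n_b - 2)
    t = (mean(a) - mean(b)) / sqrt(sp2 * (1 / n_a + 1 / n_b))
    return t, n_a + n_b - 2


def reject_two_sided(t, critical_value):
    # Two-sided test: reject H0 when |t| is larger than the critical value.
    return abs(t) > critical_value


version_a = [5.1, 4.9, 5.6, 5.8, 6.0, 5.4]  # made-up metric for version A
version_b = [4.2, 4.8, 4.5, 4.9, 4.4, 4.6]  # made-up metric for version B
t, df = pooled_t_statistic(version_a, version_b)
# 2.228: two-sided 5% critical value of the t distribution with df = 10 (from a table).
print(round(t, 3), df, reject_two_sided(t, 2.228))
```

Comparing |t| with the critical value gives the same decision as comparing the p-value with alpha; it is just the same threshold expressed on the scale of the statistic.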

The critical value is just another form of alpha. Just as the Bonferroni method lowers alpha, Tukey's method changes the critical value: it is taken from the studentized range distribution (q) instead of the t distribution.

Moreover, the two statistics are related by q = √2 · t, but you do not need to calculate the critical value by hand; there are many free calculators online.
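A minimal standard-library sketch of Tukey's pairwise comparisons is below. It assumes the usual one-way ANOVA setup (pooled within-group variance as the error term) and uses the Tukey-Kramer form of the standard error so groups may have different sizes. The critical value `q_critical` is assumed to come from a studentized range table or an online calculator; 3.77 is roughly the 5% value for 3 groups and 12 error degrees of freedom. The group data and the function name are made up. In real work, libraries such as SciPy (`scipy.stats.tukey_hsd` in recent versions) or statsmodels (`pairwise_tukeyhsd`) do this for you.

```python
from itertools import combinations
from math import sqrt
from statistics import mean, variance


def tukey_hsd(groups, q_critical):
    """Pairwise Tukey(-Kramer) comparisons.

    groups: dict mapping a group name to its list of observations.
    q_critical: studentized range critical value for (number of groups, error df),
                assumed to be looked up from a table or calculator.
    """
    k = len(groups)
    df_error = sum(len(v) for v in groups.values()) - k
    # Mean squared error: pooled within-group variance across all groups.
    mse = sum((len(v) - 1) * variance(v) for v in groups.values()) / df_error
    results = []
    for (name_i, xs_i), (name_j, xs_j) in combinations(groups.items(), 2):
        diff = mean(xs_i) - mean(xs_j)
        # Standard error used by the studentized range statistic.
        se = sqrt(mse / 2 * (1 / len(xs_i) + 1 / len(xs_j)))
        q = abs(diff) / se
        t_equiv = q / sqrt(2)  # the same comparison on the t scale, since q = sqrt(2) * t
        results.append((name_i, name_j, round(diff, 3), round(q, 3), round(t_equiv, 3), q > q_critical))
    return results


groups = {  # made-up data: 3 groups of 5, so error df = 15 - 3 = 12
    "A": [12, 14, 11, 13, 15],
    "B": [18, 17, 19, 16, 20],
    "C": [13, 15, 12, 14, 16],
}
for row in tukey_hsd(groups, q_critical=3.77):
    print(row)
```

Each row is (group, group, mean difference, q, equivalent t, rejected?). Every pair is judged against the same, larger q critical value, which is how the method protects all the pairwise comparisons at once.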

Benjamini–Hochberg procedure

Since we are facing a "multiple" comparison problem, why insist on giving all of the tests the same threshold?

Here, we compare each p-value with its own threshold. Sort the m p-values from smallest to largest and let k be the rank of a p-value (k = 1 is the smallest, k = m the largest); its threshold is (k/m)alpha. More precisely, we want to find the largest p-value that is still at or below its threshold, that is, the largest k with p(k) ≤ (k/m)alpha.

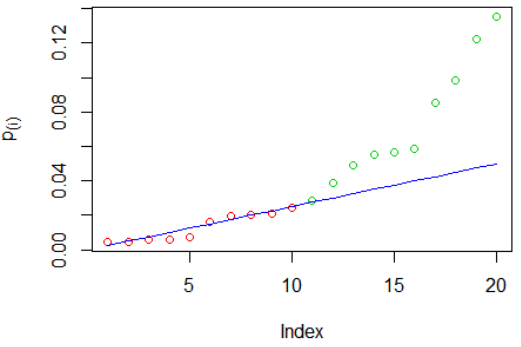

Once we find that special p-value, we reject H0 for that test and for every test with a smaller p-value, as in the picture above.

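The y-axis is the p-value, the blue line is the threshold, the red dots are the tests whose H0 is not rejected, and the green dots are the tests whose H0 is rejected. So the only thing we need to find is the boundary green dot: the green dot with the largest p-value, which is the first green dot we meet when scanning down from the largest p-value.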
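The procedure can be sketched in a few lines of Python: sort the p-values, find the largest rank k with p(k) ≤ (k/m)alpha, and reject every test ranked 1 to k. The function name and the p-values are made up for illustration; in practice, statsmodels' `multipletests` (with `method="fdr_bh"`) implements the same procedure.

```python
from typing import List, Sequence


def benjamini_hochberg(p_values: Sequence[float], alpha: float = 0.05) -> List[bool]:
    """Return True for each test whose H0 is rejected by the Benjamini-Hochberg procedure."""
    m = len(p_values)
    # Test indices ordered from smallest to largest p-value (rank k = 1..m).
    order = sorted(range(m), key=lambda i: p_values[i])
    # Largest rank k whose p-value is at or below its own threshold (k/m) * alpha.
    cutoff = 0
    for k, i in enumerate(order, start=1):
        if p_values[i] <= k / m * alpha:
            cutoff = k
    # Reject every test ranked 1..cutoff, even if some of them lie above their own line.
    reject = [False] * m
    for i in order[:cutoff]:
        reject[i] = True
    return reject


p_values = [0.01, 0.045, 0.025, 0.005, 0.20]  # made-up p-values from m = 5 tests
print(benjamini_hochberg(p_values))
```

On these p-values the procedure rejects three tests (0.005, 0.01 and 0.025), while Bonferroni with threshold 0.05/5 = 0.01 would reject only two.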

Conclusion

Actually, the multiple comparison problem is a huge area in statistics, and many researchers are still trying to find the best way to deal with it.

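In this article, I tried my best to describe these methods as simply as possible. Their formal descriptions are far more "mathematical" and include many technical terms, such as the family-wise error rate and the false discovery rate (roughly speaking, the Bonferroni and Tukey methods control the family-wise error rate, while the Benjamini–Hochberg procedure controls the false discovery rate). If you are interested, Wikipedia has more detailed and complete explanations.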

I hope you enjoy this article.
